Sign Language Recognition and Text Conversion

A system that recognizes sign language gestures using a Leap Motion depth sensor and converts them into text.

The project targets real-time use: hand gestures captured by the sensor are classified and translated into text as they are made. This improves accessibility for the hearing-impaired community by providing a non-intrusive and efficient communication interface.

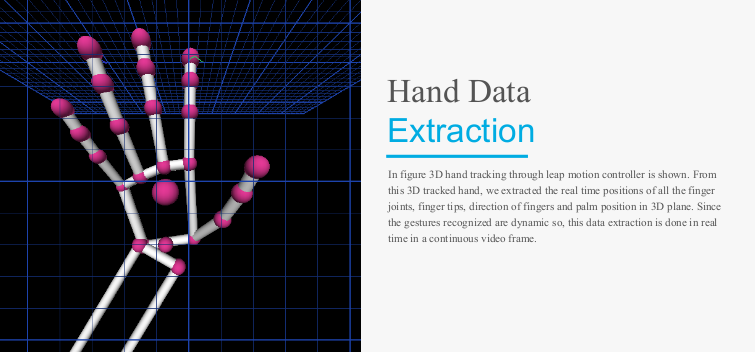

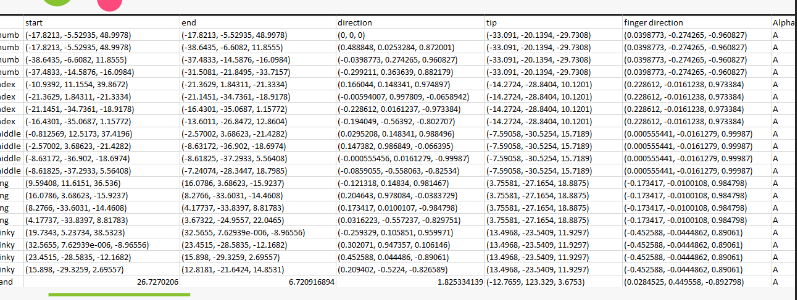

Left: Overview. Middle: 3D pose data. Right: Collected dataset.

Features

- Depth Sensor-Based Hand Tracking: Utilizes the Leap Motion sensor to capture precise hand and finger movements.

- Sign Language Recognition: Implements machine learning models to classify gestures accurately.

- Real-Time Text Conversion: Converts recognized gestures into text output, facilitating communication.

- Interactive & Non-Intrusive: Works without gloves or markers, ensuring a natural user experience.
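
As an illustration of the real-time text conversion step, one simple approach is to emit a character only after the classifier has produced the same label for several consecutive frames, which suppresses flicker between gestures. The sketch below is a minimal example under that assumption and is not taken from the project's code; the function name `labels_to_text`, the `min_frames` threshold, and the `"-"` rest label are all hypothetical.

```python
def labels_to_text(frame_labels, min_frames=5, rest_label="-"):
    """Collapse a stream of per-frame gesture labels into text.

    A label is emitted once, at the moment it has been seen for
    `min_frames` consecutive frames. It can be emitted again only
    after a different label has interrupted the run.
    `rest_label` marks "no gesture" frames and is never emitted.
    """
    text = []
    current = None  # label of the run in progress
    run = 0         # length of the run in progress
    for label in frame_labels:
        if label == current:
            run += 1
        else:
            current, run = label, 1
        # Emit exactly once, when the run first reaches the threshold.
        if run == min_frames and current != rest_label:
            text.append(current)
    return "".join(text)


if __name__ == "__main__":
    # Hypothetical per-frame classifier output: a held "H", a pause,
    # a held "I", then two noisy "X" frames that are too short to count.
    frames = ["H"] * 6 + ["-"] * 3 + ["I"] * 7 + ["X"] * 2
    print(labels_to_text(frames))
```

A larger `min_frames` makes the output more stable at the cost of added delay, so in practice the value would be tuned against the sensor's frame rate.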

Challenges & Solutions

- Gesture Variability: Trained a robust deep learning model to handle variations in hand positioning and speed.

- Depth Data Processing: Optimized sensor data fusion for accurate tracking and classification.

- Latency Reduction: Applied lightweight CNN models for real-time performance.
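
The project addresses gesture variability through model training. A complementary preprocessing step, shown here only as a hedged sketch and not as the project's actual pipeline, is to normalize each frame of joint positions relative to the palm (for hand positioning) and to resample each gesture to a fixed number of frames (for speed). The helper names `normalize_hand` and `resample`, and the plain-tuple data layout, are assumptions made for illustration.

```python
import math


def normalize_hand(joints, palm):
    """Make one frame of joints invariant to hand position and size.

    joints: list of (x, y, z) tuples; palm: (x, y, z) tuple.
    Joints are expressed relative to the palm, then divided by the
    distance of the farthest joint from the palm.
    """
    centered = [tuple(j[i] - palm[i] for i in range(3)) for j in joints]
    size = max(math.sqrt(sum(c * c for c in p)) for p in centered)
    if size == 0:
        # Degenerate frame: every joint coincides with the palm.
        return centered
    return [tuple(c / size for c in p) for p in centered]


def resample(frames, target_len):
    """Pick `target_len` evenly spaced frames (nearest index).

    Fast and slow performances of the same gesture end up with the
    same sequence length, which a fixed-input model can consume.
    """
    if not frames or target_len < 1:
        raise ValueError("need at least one frame and target_len >= 1")
    if target_len == 1:
        return [frames[0]]
    last = len(frames) - 1
    return [frames[round(i * last / (target_len - 1))]
            for i in range(target_len)]


if __name__ == "__main__":
    frame = [(1.0, 2.0, 3.0), (3.0, 2.0, 3.0)]
    print(normalize_hand(frame, palm=(1.0, 2.0, 3.0)))
    print(resample(list(range(10)), 4))
```

Nearest-index resampling is the simplest choice; interpolating between neighbouring frames would give smoother sequences at slightly higher cost.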

Results

The system recognizes sign language gestures with high accuracy and converts them into text in real time, making it useful for assistive communication and education.