Human-Robot Interaction through Behavioral-Based Modeling

A robot that interacts with humans by recognizing their facial expressions and physically following them, driven by behavioral-based modeling.

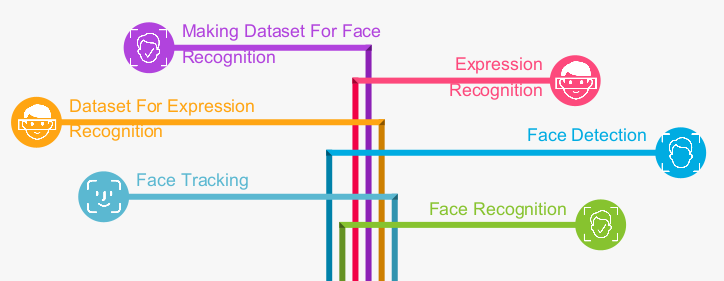

This project focuses on human-robot interaction (HRI) using behavioral-based modeling, allowing a robot to analyze a person's facial expressions and follow that person, adapting its behavior to what it observes.

The system integrates computer vision, deep learning, and robotic control to create an intuitive and interactive experience.


Features

- Facial Expression Recognition: Uses deep learning models to recognize human emotions from facial expressions in real time.

- Human Tracking & Following: Implements pose estimation and motion tracking to follow a human smoothly.

- Behavioral-Based Decision Making: The robot adapts its actions based on human expressions and movement patterns.

- Real-Time Processing: Optimized inference pipeline for low-latency interaction.
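One common way to structure behavioral-based decision making is priority-based arbitration in the spirit of subsumption: each behavior checks its own trigger condition, and the highest-priority behavior that fires chooses the action. The sketch below assumes this scheme; the project does not specify its exact arbitration method. The `Percept` fields, emotion labels, distance threshold, and action names are all hypothetical placeholders.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Percept:
    """One frame of (hypothetical) perception output."""
    emotion: Optional[str]       # e.g. "happy", "sad", "angry"; None if no face is visible
    distance_m: Optional[float]  # depth to the tracked person in metres; None if nobody is tracked


# Hypothetical mapping from a recognized emotion to a social response.
EMOTION_RESPONSES = {
    "angry": "keep_distance",
    "fearful": "keep_distance",
    "sad": "approach_slowly",
}

MIN_SAFE_DISTANCE_M = 0.5  # hypothetical safety threshold


def avoid_collision(p: Percept) -> Optional[str]:
    # Highest priority: never get too close to the person.
    if p.distance_m is not None and p.distance_m < MIN_SAFE_DISTANCE_M:
        return "back_off"
    return None


def respond_to_emotion(p: Percept) -> Optional[str]:
    # Fires only for emotions that have an explicit response; others fall through.
    return EMOTION_RESPONSES.get(p.emotion) if p.emotion else None


def follow_person(p: Percept) -> Optional[str]:
    # Default social behavior whenever a person is being tracked.
    return "follow" if p.distance_m is not None else None


def search(p: Percept) -> Optional[str]:
    # Lowest priority: always fires, so the robot looks for someone to interact with.
    return "search"


# Ordered from highest to lowest priority.
BEHAVIORS: List[Callable[[Percept], Optional[str]]] = [
    avoid_collision,
    respond_to_emotion,
    follow_person,
    search,
]


def arbitrate(p: Percept) -> str:
    """Return the action of the highest-priority behavior that fires."""
    for behavior in BEHAVIORS:
        action = behavior(p)
        if action is not None:
            return action
    raise RuntimeError("no behavior fired")  # unreachable while search() is last
```

For example, `arbitrate(Percept("happy", 1.2))` yields `"follow"`, while `arbitrate(Percept("angry", 1.2))` yields `"keep_distance"`. Keeping each behavior small and independent makes it easy to add or reorder responses without touching the others.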

Challenges & Solutions

- Real-Time Facial Expression Analysis: Used optimized CNN models for fast emotion detection.

- Accurate Human Tracking: Integrated pose estimation and depth sensing for reliable tracking.

- Smooth Movement Control: Applied PID control and reinforcement learning to achieve natural motion.
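The PID part of the movement control can be sketched as two loops: one steers to keep the person centered in the camera image, and the other regulates forward speed to hold a target following distance from the depth estimate. This is an illustrative sketch only. It assumes the tracker provides the person's horizontal pixel position and distance, and it does not cover the reinforcement-learning component. The function names, gains, limits, and desired distance are hypothetical. The sign convention (positive angular velocity turns left) follows ROS REP 103.

```python
from dataclasses import dataclass, field
from typing import Optional, Tuple


@dataclass
class PID:
    """Textbook PID controller with a symmetric output clamp (no anti-windup)."""
    kp: float
    ki: float
    kd: float
    output_limit: float
    _integral: float = field(default=0.0, init=False)
    _prev_error: Optional[float] = field(default=None, init=False)

    def update(self, error: float, dt: float) -> float:
        if dt <= 0:
            raise ValueError("dt must be positive")
        self._integral += error * dt
        # Skip the derivative term on the first sample to avoid a derivative kick.
        derivative = 0.0 if self._prev_error is None else (error - self._prev_error) / dt
        self._prev_error = error
        u = self.kp * error + self.ki * self._integral + self.kd * derivative
        return max(-self.output_limit, min(self.output_limit, u))


def follow_command(
    target_x_px: float,
    image_width_px: float,
    distance_m: float,
    desired_distance_m: float,
    heading_pid: PID,
    distance_pid: PID,
    dt: float,
) -> Tuple[float, float]:
    """Return (linear, angular) velocity commands for following a person."""
    half_width = image_width_px / 2
    # Normalized horizontal offset in [-1, 1]; positive means the person is right of center.
    heading_error = (target_x_px - half_width) / half_width
    # Positive means the person is farther away than desired, so drive forward.
    distance_error = distance_m - desired_distance_m
    # Positive angular velocity turns left, so a person on the right needs a negative command.
    angular = -heading_pid.update(heading_error, dt)
    linear = distance_pid.update(distance_error, dt)
    return linear, angular
```

With `PID(kp=1.0, ki=0.0, kd=0.0, output_limit=1.0)` for heading and `PID(kp=0.5, ki=0.0, kd=0.0, output_limit=0.5)` for distance, a person at x = 480 px in a 640 px-wide image, 2.0 m away with a 1.0 m target distance, produces a right turn (angular = -0.5) and a forward command clamped to 0.5. In practice the output clamps and a derivative term are what keep the motion from feeling jerky. A real deployment would likely also add integral anti-windup.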

Results

The robot successfully interacts with humans, responding to facial expressions and movement cues in real time, which makes it suitable for assistive robotics and social interaction.